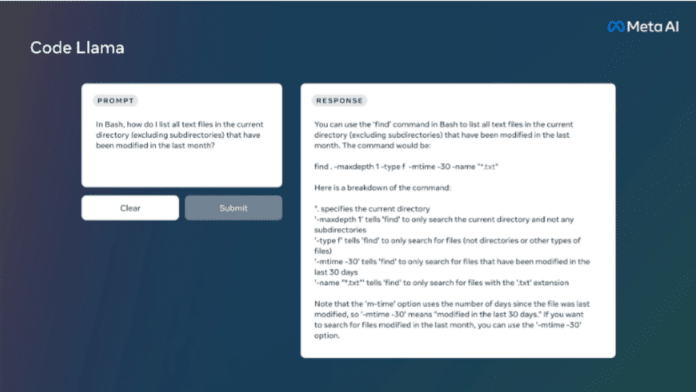

Code Llama is intended to be the strongest of the publicly available large language models (LLMs) on coding tasks.

It can also help with tasks such as code completion and debugging.

Meta is releasing three sizes of Code Llama with 7 billion, 13 billion, and 34 billion parameters.

Each of these models is trained on 500 billion tokens of code and code-related data.

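The 7B and 13B models are also trained with fill-in-the-middle (FIM) capability, which lets them insert code into existing code, so they can support tasks like code completion right out of the box.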

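The three sizes address different serving and latency requirements. The smaller 7B and 13B models are faster and better suited to tasks that require low latency, such as real-time code completion.

The 34B model, by contrast, returns the best overall results and allows for better coding assistance.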

Programmers are already using LLMs to assist in a variety of tasks.

Publicly available, code-specific models can facilitate the development of new technologies that improve people's lives.

source: www.techworm.net